School Administrator Magazine

AASA's award-winning magazine provides big-picture perspectives on a broad range of issues in school system leadership and resources to support the effective operation of schools nationwide.

Current Issue

School Administrator: Reframing the Narrative on Public Schools


Previous Issues

December 2025: School Administrator

This issue examines how public school districts can build strong relationships with their communities and make their schools a top choice for families.

November 2025: School Administrator

This issue examines how superintendents apply their faith to their leadership. Plus, finding common ground on religion in public schools.

October 2025: School Administrator

This issue examines how school districts are expanding new methods of tutoring and professional coaching.

September 2025: School Administrator

This issue examines solutions and strategies for superintendents handling enrollment decline, school mergers and closures in their school districts.

August 2025: School Administrator

This issue explores various types of literacy and how school districts are teaching much-needed lifelong skills.

July 2025: School Administrator

This digital-only issue compiles articles and columns from the past year examining school culture wars, superintendents as education advocates, artificial intelligence, parenting in the public eye and much more.

June 2025: School Administrator

This issue shares inspiration and practical ideas from the 2025 state superintendent of the year winners, addressing how to combat misinformation, pass a tax levy after multiple failures and demonstrate a district's return on investment.

-

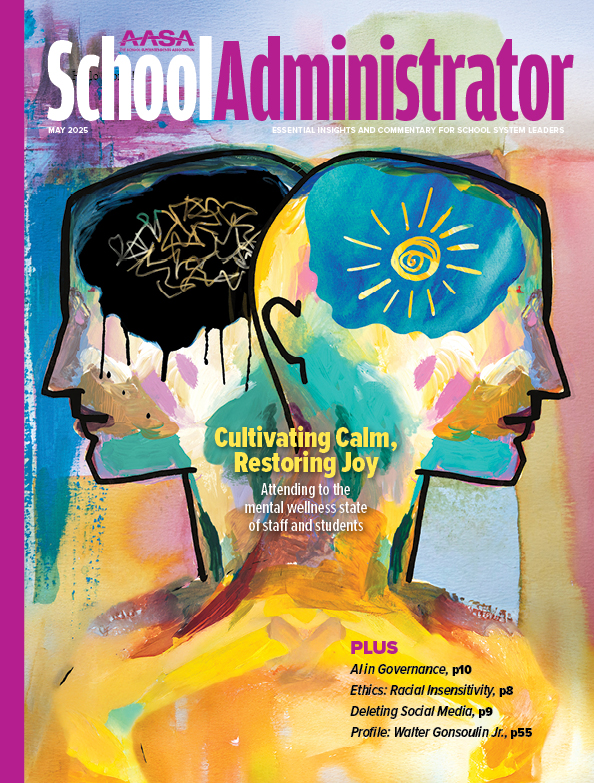

May 2025: School Administrator

This issue dives into how school districts can create safe and supportive school environments for their staff and students.

School Administrator Staff

Advertise in School Administrator

For information on advertising with AASA, contact Kathy Sveen at 312-673-5635 or ksveen@smithbucklin.com.
